Microsoft 💘 Open Source - Microsoft makes it easier to build scalable platforms

Learn how Microsoft is making it easier to build scalable platforms through Kubernetes, containerization, open-sourcing Azure Functions runtime and contributing to open standards.

In our previous blog post, we discussed the challenges of hosting open-source technologies yourself and the responsibilities that come with it. Luckily, Azure makes this a lot easier.

This article is part of the Microsoft 💘 Open Source blog post series:

- Microsoft’s journey to open source (link)

- Azure makes it easy to run open-source products (link)

- Microsoft makes it easier to build scalable platforms (this post)

- Giving back to open source (link)

Over the past years, Microsoft has been open-sourcing some of its own products and tools to make it easier for customers to build apps & platforms.

In this blog post, we will have a closer look at how Microsoft is helping.

We will mainly focus on the cloud-native space but it goes beyond that with projects such as VS Code, Xamarin, and more.

As I’ve mentioned in the first blog post, I personally think that the Deis acquisition and hiring of Brendan Burns are two key milestones that were fundamental to Microsoft’s involvement in the Cloud-Native & Kubernetes space.

How are Microsoft & Azure helping?

Azure has embraced Kubernetes and made it one of the key services of its cloud offering, helping customers use Kubernetes and aiming to simplify things. To achieve this, it starts with all the contributions that Microsoft has been making.

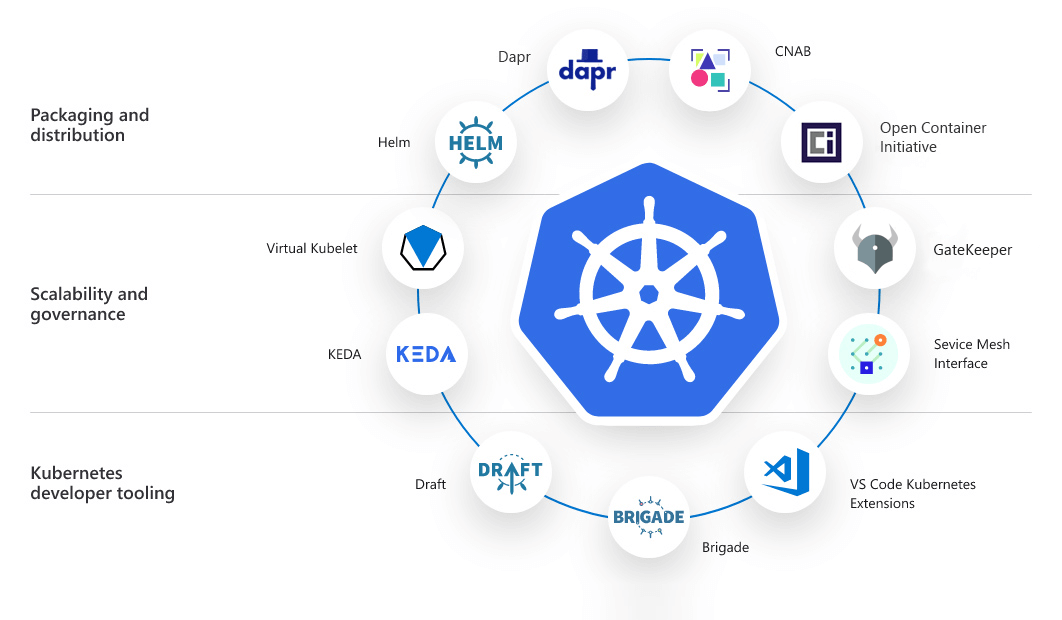

The upstream open-source team plays a big role in this. It has been involved with Kubernetes itself, but has also started a wide range of projects such as Virtual Kubelet, Helm, Open Policy Agent Gatekeeper, Service Mesh Interface and more!

The Azure CTO Incubation team has been extending Kubernetes aiming to simplify the development of apps on Kubernetes with projects such as KEDA (collaboration with Azure Functions), Dapr, and the Open Application Model (OAM).

Clemens Vasters from Azure Messaging has been one of the main drivers behind the CloudEvents specification (and many other open specs such as AMQP and OPC UA), and the Azure Container Registry / Microsoft Container Registry team is heavily involved in the Open Container Initiative.

And there is more, but Azure provides a nice overview of their ongoing involvements:

In parallel, we have teams such as Azure Functions that have just celebrated their 5th anniversary:

5 years old today! Thanks to our amazing community who’s made the last 5 years incredible, and can’t wait for what’s to come 🎂🥳🎉

— Azure Functions (@AzureFunctions) March 31, 2021

So how’re you going to celebrate? 🎁

What’s unique about Azure Functions is that it is one of the first, and maybe one of very few, Azure services that have fully open-sourced their runtime and had other offerings built on top of it, such as Azure Logic Apps.

It goes without saying that I’m only scratching the surface here and that there are a lot more great involvements and projects that I did not mention. I highly recommend going to the overview of the open-source projects to get an idea.

Improving Azure by relying on open source projects & standards

So how do all these open source contributions help Azure customers?

Embrace & extend instead of re-invent

In the early days of Microsoft, the strategy was to embrace, extend & extinguish, but this has drastically changed to embracing open-source technologies and extending them, instead of re-inventing them.

Kubernetes is one of them. Instead of building its own container orchestrator and trying to compete, Microsoft has simply adopted Kubernetes and made Azure a great place to run it!

"Well, hello - What about Service Fabric?!" They both are able to orchestrate containers, yes, but they are aimed at totally different use-cases and are not the same. But we digress.

Azure Kubernetes Service has been improved a lot by the open-source work that is going on:

- Virtual Nodes is using Virtual Kubelet to allow you to overflow workloads to Azure Container Instances

- Azure Policy integration uses Gatekeeper

- Azure Key Vault integration builds on the Secrets Store CSI Driver

- Cluster autoscaling is using Cluster Autoscaler

- Open Service Mesh is providing a service mesh that is Service Mesh Interface-compliant

- KEDA is bringing event-driven application autoscaling to Kubernetes

- ...

One of the best examples of how Kubernetes is being adopted is Microsoft’s hybrid and on-premises strategy. It has gone through various iterations and in its latest phase it is fully standardizing on Kubernetes with Azure Arc, instead of building their own stack (again).

This not only gives customers a unified way of managing and operating their Kubernetes clusters, but also brings all the open-source goodness and Azure cloud services to customers where they need them. Azure application services with Azure Arc is one of the best examples, as it brings Azure PaaS to customers that cannot go to Azure.

Azure Event Grid is another example that is embracing open standards! It allows you to rely on CloudEvents as the event schema instead of Azure’s homegrown schema. This makes you more flexible, reduces lock-in and avoids leaking your internal infrastructure to your customers or forcing it on them.

So, frankly, why would anyone use a proprietary schema over an open alternative? I think legacy should be the only acceptable answer. There are no indications, but that’s probably the only reason why that schema is still around.

It is no surprise that Clemens Vasters is part of this team, as the integration has been seamless from the start.

Vendor lock-ins, portability and multi-cloud

This area has been growing over the last couple of years, as more customers want to be able to easily port their platform to another vendor and/or host it on multiple clouds.

Let’s be clear, there is no “easy” way to do this, but it is becoming easier.

Using open standards is always a great place to start, because it helps you avoid proprietary protocols, schemas and endpoints. CloudEvents is a great example: it is a data structure for describing events that allows you to be flexible and easily integrate with various cloud services, tools and products. If only Kubernetes would show the way and adopt it…
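To make that a bit more concrete, here is a minimal sketch of what a CloudEvents 1.0 envelope looks like when built by hand in Python. The event type, source and payload are made up for illustration; only the attribute names (`specversion`, `id`, `source`, `type` and the optional ones) come from the CloudEvents specification.

```python
import json
import uuid
from datetime import datetime, timezone


def make_cloud_event(event_type, source, data, subject=None):
    """Build a CloudEvents 1.0 envelope as a plain dict (structured JSON mode)."""
    event = {
        # Required context attributes in CloudEvents 1.0
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        # Optional context attributes
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        # The actual payload, which the spec leaves entirely up to you
        "data": data,
    }
    if subject is not None:
        event["subject"] = subject
    return event


# Hypothetical event: the type, source and payload are illustrative only.
event = make_cloud_event(
    event_type="com.example.orders.created",
    source="/example/orders",
    data={"orderId": "1234", "total": 42.5},
    subject="orders/1234",
)

print(json.dumps(event, indent=2))
```

A consumer only depends on this open envelope, not on the service that emitted it, which is what makes it easier to swap the eventing infrastructure later on.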

Service Mesh Interface (SMI) is another great example that allows you to use any supported service mesh, but rely on an open standard to manage it. This is a great way for other systems to integrate with it as well. For example, KEDA is looking to use SMI to get metrics from service meshes without having to know what kind of mesh it is.

Containerization is another key aspect that allows you to decouple your application from the underlying infrastructure. That’s why I always use containers and start hosting them on Azure Web Apps for Containers. If we ever have to migrate them to Kubernetes we are “ready to go”.

Standardizing your platform on Kubernetes as a company is another approach, but that requires you to invest a lot in it. This can be combined with Azure Arc, which gives you a single pane of glass for all your clouds/vendors and centralizes things.

Like we’ve just discussed, you can also standardize on Azure PaaS that you host on Azure, and use Azure application services with Azure Arc for other clouds, which brings a more PaaSy touch to it. I only expect this area to grow.

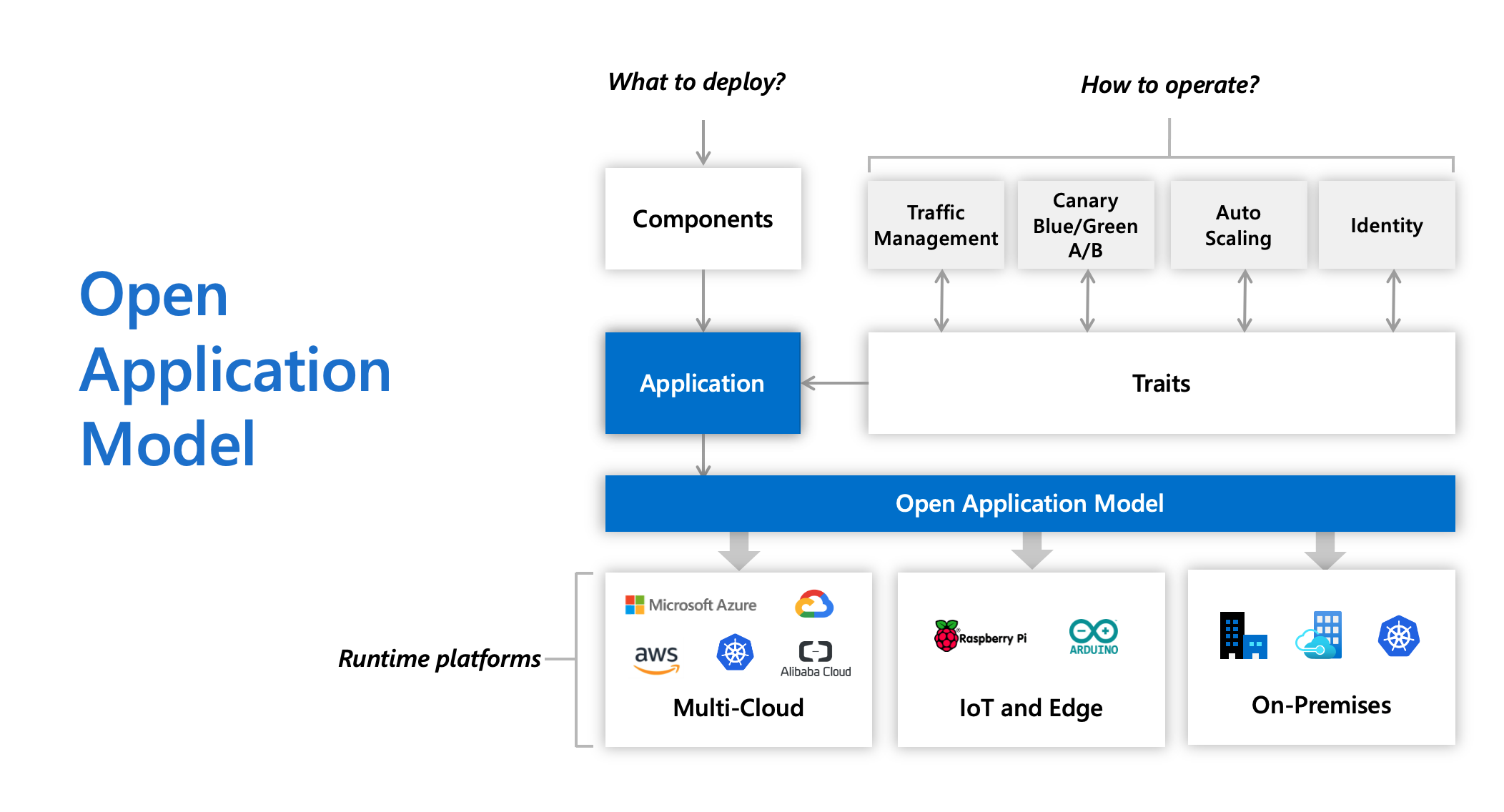

Then there is Open Application Model (OAM), an open standard for describing what cloud-native apps look like. It covers both the application & infrastructure, so you can describe the various components of your application without explicitly relying on a runtime platform.

This is very promising and Microsoft was one of its creators. Unfortunately there is no Azure support for it to date, but you can use it on Kubernetes through Crossplane if you want.

But in the end, you will always have some lock-in. The question is: how big is the lock-in and what is the impact?

Using a standard also means that you will have a lock-in, but most probably you will be more flexible. Using a product from a vendor can come in different flavors as well:

- Service-level lock-in - Managed product that is closed-source and you do not have an escape hatch

- Open Core - The core of the product is open source and available as a managed offering, but not all of the features are open source

- Runtime-level - The product is open source and available as a managed offering, but you can host it yourself

The example that I always use is Azure Functions, an Azure service that you can deploy serverless applications to. People tend to think that this means a big lock-in to Azure, but since the runtime is open source, you can port your serverless workloads anywhere (edge, cloud, on-prem). However, you will pay the price because now you are in charge of scaling, managing, … all of it.

Reducing lock-ins and being able to port your platform anywhere clearly is not that simple, but there certainly are options and it depends on what you really want to achieve.

Some things to keep in mind:

- Open source does not mean free - Always make sure you are using it according to its license, and keep in mind that it comes with trade-offs. For example, if you host it, you run it.

- Product ownership - Is it licensed by a single company or is it part of a foundation? Who has control over it?

- Support - Can you call somebody in the middle of the night?

Do you want to reduce the lock-ins as much as possible, at the cost of doing a lot more yourself? Or do you want to be able to use another vendor, if you will ever have to?

And most importantly, how important is this requirement? Will you ever have to run on multiple clouds?

Making it easier to focus on building, instead of infrastructure

Last but not least, let’s have a look at a few things that help you focus on your applications and not the infrastructure.

Dapr helps you build distributed applications by providing various building blocks that you can use to build your applications. As part of it, it helps you abstract your dependencies away from your application so that you don’t have to worry about that in the app and it becomes an infrastructure detail.

Donovan Brown nicely explains on MJFChat that you just want to store and read certain information, but you don’t care where it’s coming from.

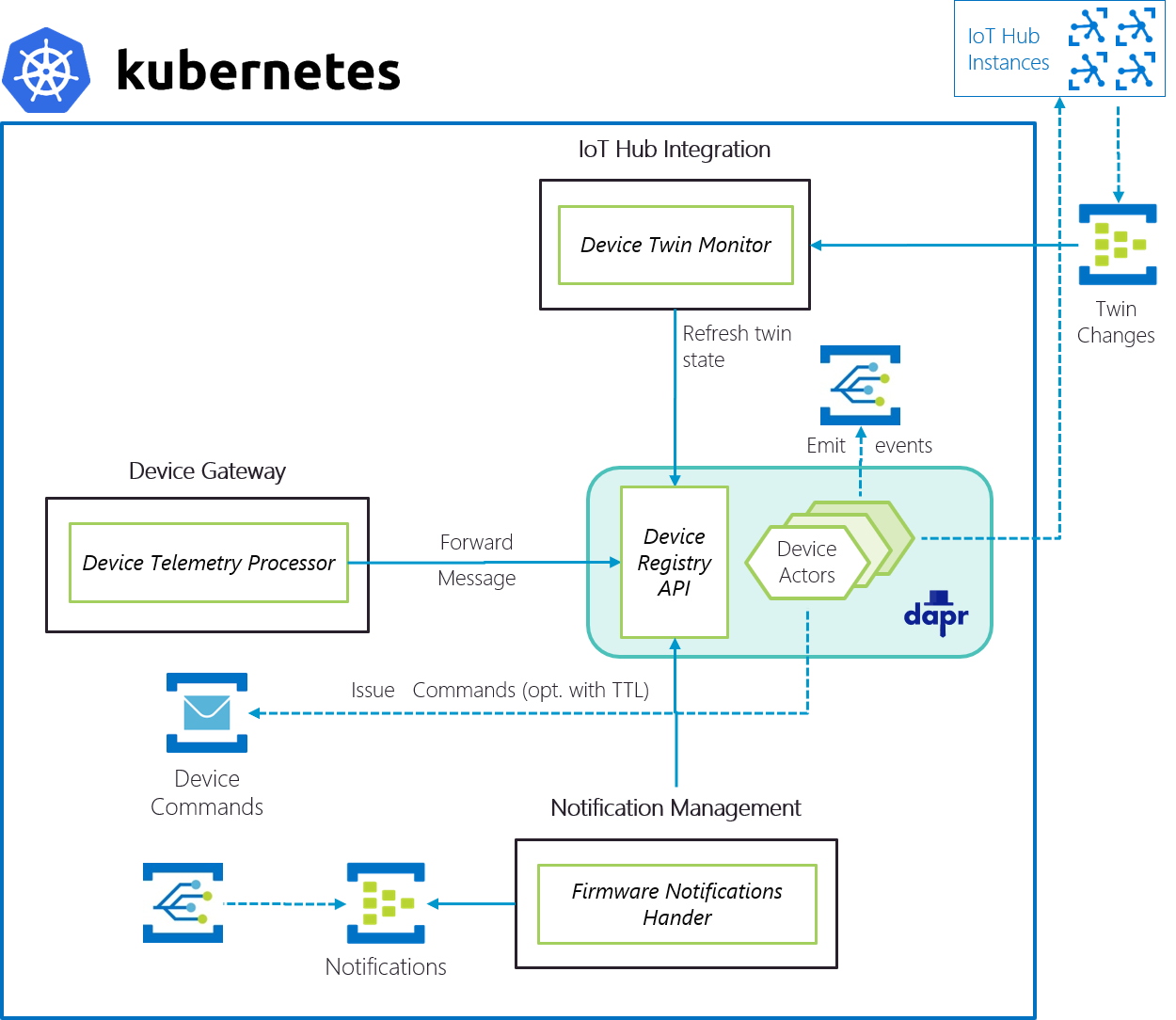
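As a rough illustration of that idea, the sketch below builds the HTTP requests an app would send to its local Dapr sidecar to save and read state. It assumes the sidecar listens on Dapr's default HTTP port 3500 and that a state store component named `statestore` is configured; the key and value are made up. The requests are only constructed and printed here, so the snippet runs without a sidecar.

```python
import json
import urllib.request

DAPR_HTTP_PORT = 3500       # Dapr's default sidecar HTTP port
STATE_STORE = "statestore"  # assumed name of the configured state store component


def state_url(store, key=None, port=DAPR_HTTP_PORT):
    """URL of the Dapr state API for a store, optionally for a single key."""
    base = f"http://localhost:{port}/v1.0/state/{store}"
    return base if key is None else f"{base}/{key}"


def save_state_request(store, key, value):
    """Request that saves one key/value pair through the sidecar."""
    body = json.dumps([{"key": key, "value": value}]).encode("utf-8")
    return urllib.request.Request(
        state_url(store),
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_state_request(store, key):
    """Request that reads one key back through the sidecar."""
    return urllib.request.Request(state_url(store, key), method="GET")


# Hypothetical device state, for illustration only.
save = save_state_request(STATE_STORE, "device-1", {"temperature": 21})
read = get_state_request(STATE_STORE, "device-1")

print(save.get_method(), save.full_url, save.data.decode("utf-8"))
print(read.get_method(), read.full_url)
```

With a sidecar running, each request can be sent with `urllib.request.urlopen(...)`. Notice that nothing in the app says whether the state lives in Redis, Azure Cosmos DB or something else; that choice sits in the Dapr component configuration.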

My favorite Dapr building block is virtual actors. They allow you to design your applications using the actor pattern and host them, for example, on Kubernetes.

In a POC that I’ve done to play around with it, I’m using them to build a custom cloud gateway for IoT devices in the field that are virtually represented in the cluster with a device actor:

And then there is Kubernetes Event-driven Autoscaling (KEDA). Clearly I am biased about this project but I’ve seen the evolution of autoscaling applications on Kubernetes and I’m happy that KEDA is making app autoscaling dead-simple on Kubernetes.

Before KEDA, you had to be a Kubernetes expert to understand what it takes to autoscale apps on external metrics, and you needed tools such as Prometheus & Promitor just to get started. That’s a lot of infrastructure to manage.

With KEDA, you just define the autoscaling and everything is managed for you! Don’t believe me? Have a look (link)!
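To give an idea of what "defining the autoscaling" looks like, here is a small sketch that generates a KEDA `ScaledObject` manifest for a queue-driven workload. The resource names, queue name and environment variable are hypothetical, and the field names follow the KEDA v2 `ScaledObject` and Azure Service Bus scaler as I understand them, so check the scaler docs before relying on it.

```python
import json


def scaled_object(name, deployment, queue_name, messages_per_replica=5,
                  min_replicas=0, max_replicas=10):
    """Build a KEDA ScaledObject manifest that scales a Deployment on queue depth."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": name},
        "spec": {
            # The workload that KEDA scales on your behalf
            "scaleTargetRef": {"name": deployment},
            "minReplicaCount": min_replicas,
            "maxReplicaCount": max_replicas,
            "triggers": [
                {
                    "type": "azure-servicebus",
                    "metadata": {
                        "queueName": queue_name,
                        # Target number of messages per replica (a string in the manifest)
                        "messageCount": str(messages_per_replica),
                        # Assumed env var on the target container holding the connection string
                        "connectionFromEnv": "SERVICEBUS_CONNECTION_STRING",
                    },
                }
            ],
        },
    }


# Hypothetical names, for illustration only.
manifest = scaled_object("orders-scaler", "orders-processor", "orders")

# kubectl accepts JSON as well as YAML, so this output can be saved and applied.
print(json.dumps(manifest, indent=2))
```

That single resource is the whole autoscaling definition: no metrics adapter or Prometheus setup to wire up yourself.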

In the last blog post of this series, we will have a look at how Microsoft is giving back to open source.

Thanks for reading,

Tom.

Photo by Chris Briggs on Unsplash